Most outdoor robotics programs do not fail at inference time.

They fail during ML preparation. Benchmark accuracy may look acceptable but

operational alignment often is not.

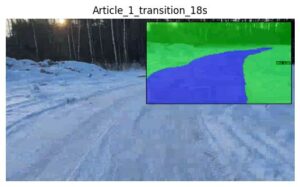

Outdoor systems operate under seasonal shifts, low-contrast terrain, motion-induced blur, camera-specific optics, ambiguous boundaries between traversable and non-traversable zones

If training and validation do not reflect these conditions, deployment risk is already embedded in the model.

To support structured evaluation under real operating conditions, I’ve made AGVScanner publicly available.

AGVScanner allows teams to:

• run their own models

• run publicly available models

• deploy user-defined segmentation or detection models

• evaluate behavior on real outdoor video, streams, or live camera

• compare generic and environment-aligned approaches

It enables structured comparison between benchmark performance and real-world stability.

GitHub: https://lnkd.in/dWh7BBdA

If you are preparing perception for an outdoor autonomous platform and want to structure the ML preparation phase around operational reality – rather than benchmark assumption: I’m open to collaborating at that stage.

Deployment-grade perception begins during preparation.